Hi all,

Let me take advantage of the “Elk Showcase” forum category to show you a little of the work I’m doing with the Elk Pi hat.

As some of you certainly know, last year I did some research on sustainer / freeze / extrapolation / droning / call-them-what-you-like algorithms, and eventually came up with a really simple and efficient time-domain algorithm for the task that works on all sorts of input signals (well, almost), guarantees no tonal coloration (on average) and produces non-static, evolving output tones. I also wrote a scientific paper about it with the help of Dr. Leonardo Gabrielli from Università Politecnica delle Marche, Ancona, Italy, which was presented at the DAFx18 conference in Aveiro, Portugal, last year. Take a look at the paper if you wish: http://dafx2018.web.ua.pt/papers/DAFx2018_paper_11.pdf

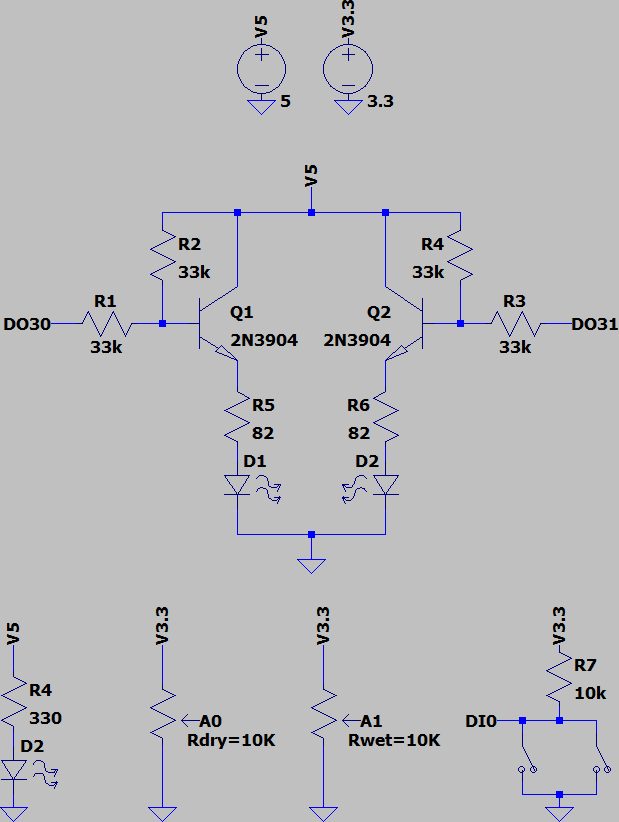

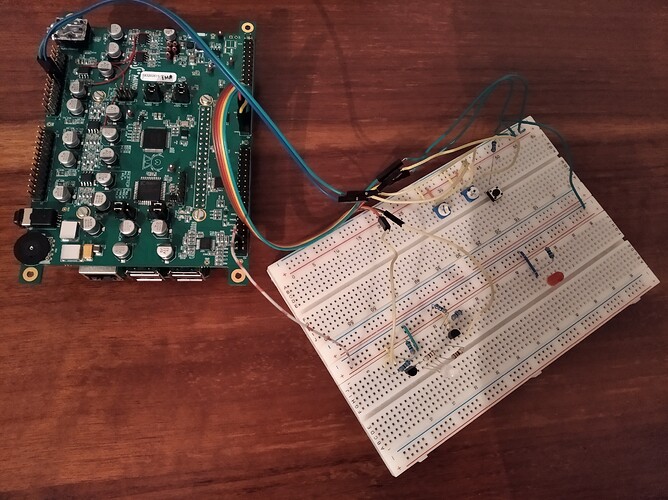

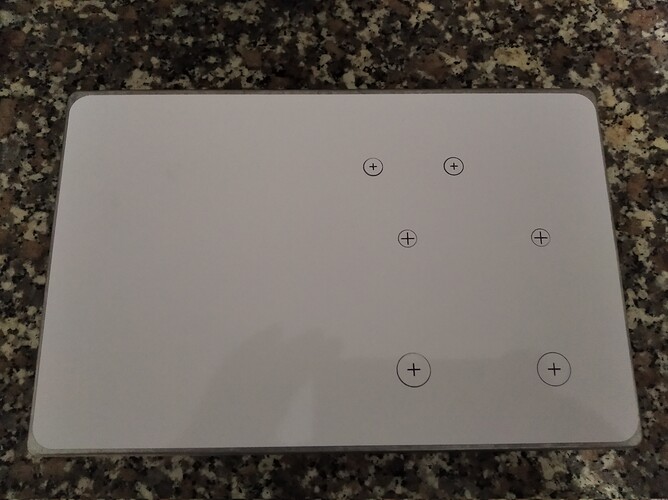

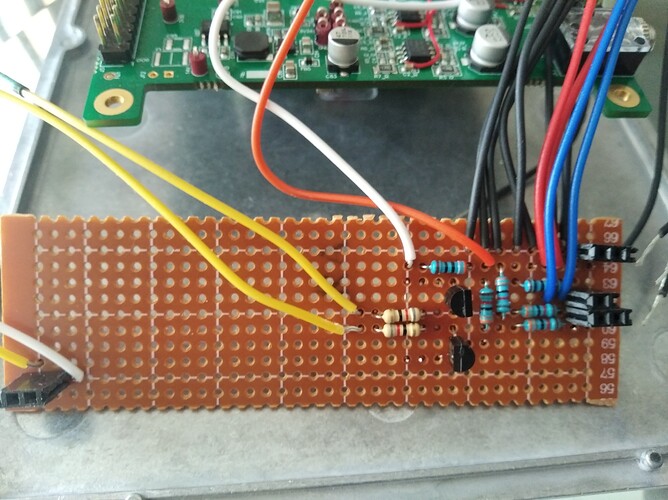

The Elk guys contacted me a few months back and asked me if I wanted to make a prototype of some device using their upcoming Elk Pi hat, and making a guitar pedal out of this algorithm was the obvious choice for me. At the moment I’ve completed the software part and I believe the hardware side of affairs is mostly done too. In order to avoid forgetting about this journey and also to provide a nice log for whoever cares enough, I’ll post from time to time about the development process in this forum thread.

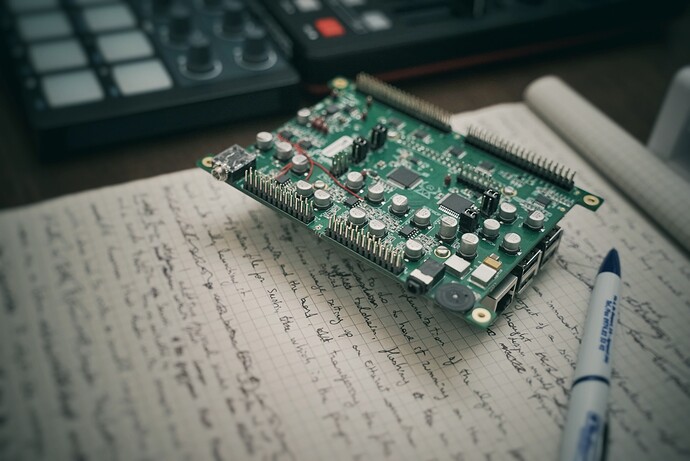

So, one morning I received a shiny new Raspberry Pi 3, with power supply, SD card (which I soon discovered was broken), and Elk Pi hat board. Here’s the system assembled as per the instructions that you can now find on GitHub.

Once I had downloaded the Elk Audio OS image and flashed it onto a working SD card, I could easily set up a shared Ethernet connection and log in via SSH. The version of the image “at the time” (a few weeks ago) offered a standard login prompt, but in the latest iterations there’s this nicer “textual logo”.

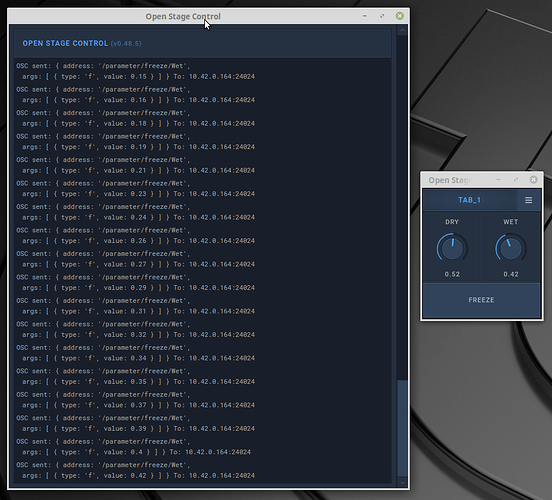

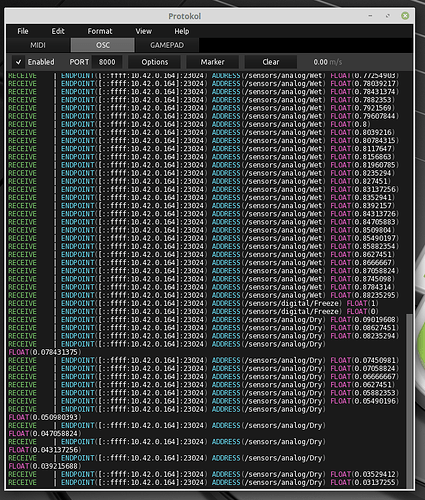

I already had the plugin written in VST3 format (without JUCE and the like; I started from the helloworld example in the VST SDK itself). It’s a pretty simple thing: you have two “continuous” parameters (dry and wet gains) and a “sustain” button that “stops” the sound. That’s all. Recompiling it for Elk was a breeze: I just needed to download the toolchain that the Elk guys gave me. The only minor “oddity” was that I also needed to apply a tiny patch (also supplied by them) to the VST SDK that allows for ARM64 builds - I’ve been assured that it will already be included in the next official VST SDK version. Finally, I just used SSH to transfer the files to the SD card, without problems.
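To give a rough idea of how small the control surface is, here is a minimal sketch of the three controls and the final dry/wet mix. It is not the actual plugin code: the names `SustainerParams` and `mixBlock` are made up for illustration, the gains are assumed to be linear, and the sustain algorithm that would produce the `wet` buffer (and read the `sustain` flag) is left out entirely.

```cpp
#include <cstddef>

// Hypothetical parameter set mirroring the three controls described above.
// Names and default values are assumptions, not taken from the real plugin.
struct SustainerParams {
    float dryGain = 1.0f;   // "continuous" parameter: gain of the unprocessed input (assumed linear)
    float wetGain = 1.0f;   // "continuous" parameter: gain of the sustained signal (assumed linear)
    bool  sustain = false;  // the "sustain" button; consumed by the sustain algorithm (not shown)
};

// Mixes one block of audio. `wet` is assumed to have been filled already by
// the sustain algorithm; this function only applies the two gains and sums.
void mixBlock(const float* dry, const float* wet, float* out,
              std::size_t numSamples, const SustainerParams& params)
{
    for (std::size_t i = 0; i < numSamples; ++i) {
        out[i] = params.dryGain * dry[i] + params.wetGain * wet[i];
    }
}
```

In a real VST3 plugin these values would arrive as parameter changes from the host rather than through a plain struct, but the per-block mix itself stays this simple.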

Next time I’ll talk about how I managed to run and control the plugin on the board.